OpenAI's new defense contract completes its military pivot

Source: MIT Technology Review

-snip-

Today, OpenAI is announcing that its technology will be deployed directly on the battlefield.

The company says it will partner with the defense-tech company Anduril, a maker of AI-powered drones, radar systems, and missiles, to help US and allied forces defend against drone attacks. OpenAI will help build AI models that “rapidly synthesize time-sensitive data, reduce the burden on human operators, and improve situational awareness” to take down enemy drones, according to the announcement. Specifics have not been released, but the program will be narrowly focused on defending US personnel and facilities from unmanned aerial threats, according to Liz Bourgeois, an OpenAI spokesperson. “This partnership is consistent with our policies and does not involve leveraging our technology to develop systems designed to harm others,” she said. An Anduril spokesperson did not provide specifics on the bases around the world where the models will be deployed but said the technology will help spot and track drones and reduce the time service members spend on dull tasks.

OpenAI’s policies banning military use of its technology unraveled in less than a year. When the company softened its once-clear rule earlier this year, it was to allow for working with the military in limited contexts, like cybersecurity, suicide prevention, and disaster relief, according to an OpenAI spokesperson.

-snip-

Amazon, Google, and OpenAI’s partner and investor Microsoft have competed for the Pentagon’s cloud computing contracts for years. Those companies have learned that working with defense can be incredibly lucrative, and OpenAI’s pivot, which comes as the company expects $5 billion in losses and is reportedly exploring new revenue streams like advertising, could signal that it wants a piece of those contracts. Big Tech’s relationships with the military also no longer elicit the outrage and scrutiny that they once did. But OpenAI is not a cloud provider, and the technology it’s building stands to do much more than simply store and retrieve data. With this new partnership, OpenAI promises to help sort through data on the battlefield, provide insights about threats, and help make the decision-making process in war faster and more efficient.

-snip-

Read more: https://www.technologyreview.com/2024/12/04/1107897/openais-new-defense-contract-completes-its-military-pivot/

Faster, yes, if they don't check the AI's results.

More efficient? Only if that's defined as turning decision-making over to AI.

But this is generative AI, with all the errors and hallucinations typical of this type of AI.

It's the same type of AI that usually comes with warnings that it is error-prone, so its results shouldn't be trusted, especially for anything important.

Mike 03 (17,542 posts)

It seemed to happen so quickly. On the one hand, we had all these warnings, often from the inventors but also from the corporations themselves. But they acted as if whether to release it was beyond their control. And then, as more came out about the behind-the-scenes drama at OpenAI, it appeared that some people there were in a rush to monetize the thing and get their big payday.

My interpretation is that they knowingly released something they had grave doubts about because they wanted the money. Now, there could be logical reasons: maybe a bunch of companies were ready to release it at the same time, and the company that did so first would make the most money.

I don't know enough about it to form a strong opinion, but I strongly suspect this was another decision made in haste that we will come to regret, probably fairly soon. And these corporations will justify it by saying we (the public) couldn't wait to have this technology. "The decision to release or not release AI was not in our hands, it was beyond our control."

intrepidity (7,945 posts)

The risk was that China would do something first, I think.

Not sure that was good reasoning, but I've heard it.

LudwigPastorius

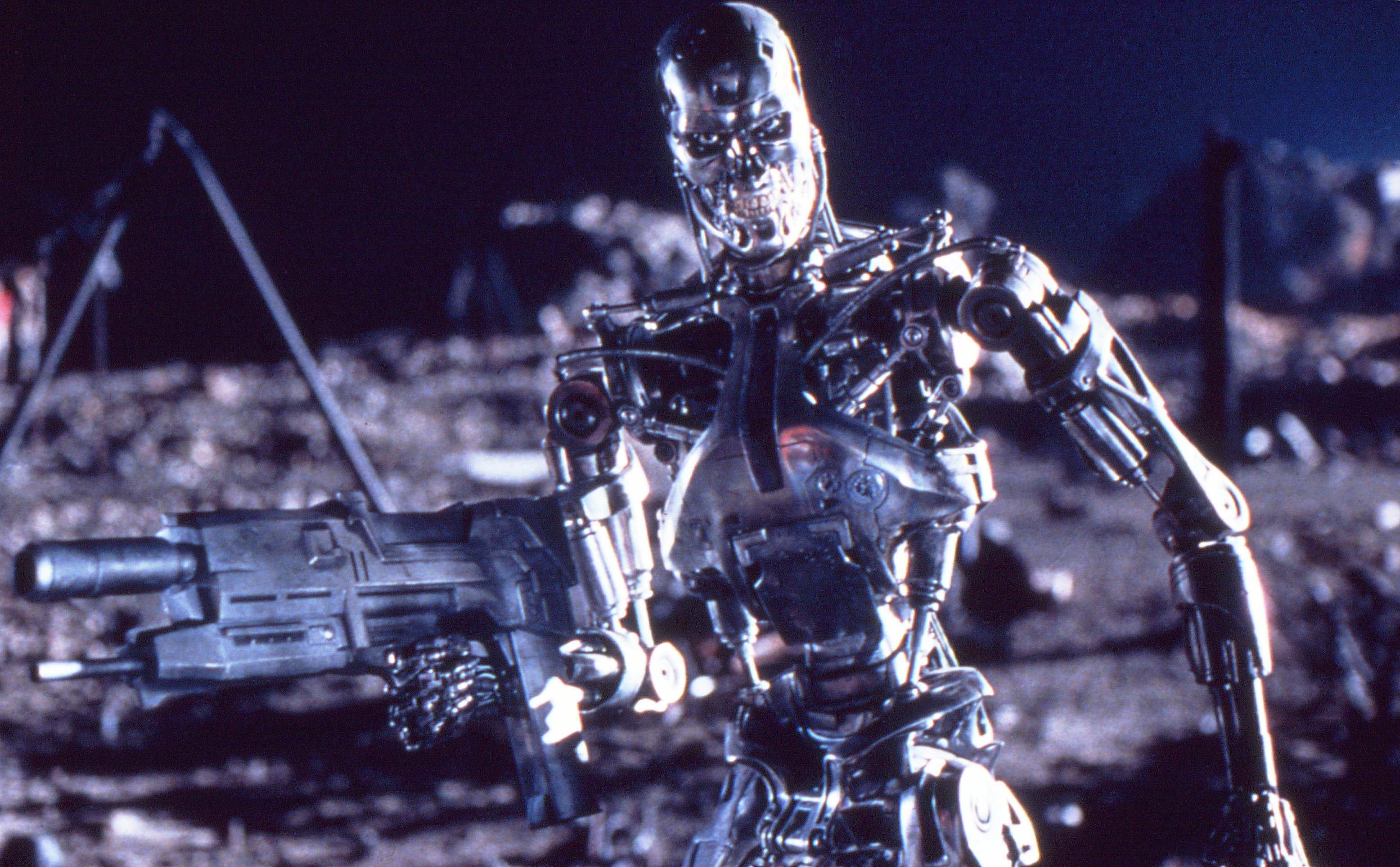

(11,209 posts)That is all.

speak easy (10,766 posts)